Trustworthy SDLC: Redefining AI Product Development

In the rapidly evolving landscape of artificial intelligence (AI), traditional software development methodologies are being reexamined to address the unique challenges posed by AI systems. Synapsed’s Trustworthy Software Development Life Cycle (T-SDLC) offers a comprehensive framework tailored to ensure that AI products are developed responsibly, ethically, and securely.

By adopting the proposed Trustworthy SDLC framework, organizations can mitigate risks, enhance product quality, ensure regulatory compliance, and ultimately build AI systems that users can confidently rely on.

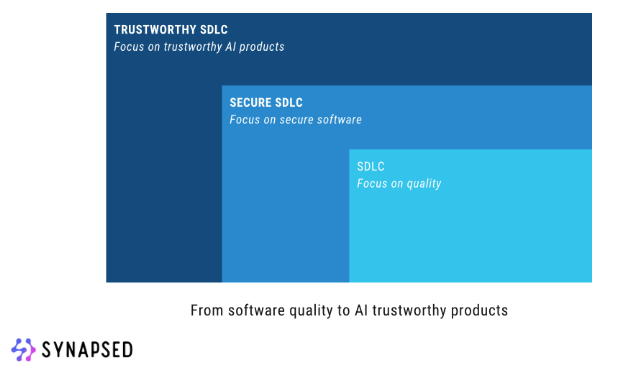

From SDLC to Trustworthy SDLC

Conventional Software Development Life Cycles (SDLCs), including Agile and Secure SDLCs, focus primarily on functional requirements and security concerns. AI systems, however, introduce complexities such as algorithmic bias, data privacy issues, and susceptibility to adversarial attacks, which traditional SDLCs are not equipped to handle effectively. The T-SDLC framework addresses these gaps by integrating ethical considerations and trustworthiness into every phase of AI product development.

Introducing Trustworthy SDLC (T-SDLC)

The Trustworthy AI Software Development Life Cycle (T-SDLC), introduced by Synapsed Research, expands on traditional software processes by embedding trust, ethics, and transparency at every phase, aligning closely with standards such as ISO/IEC 5338. Here is a concise overview of the phases:

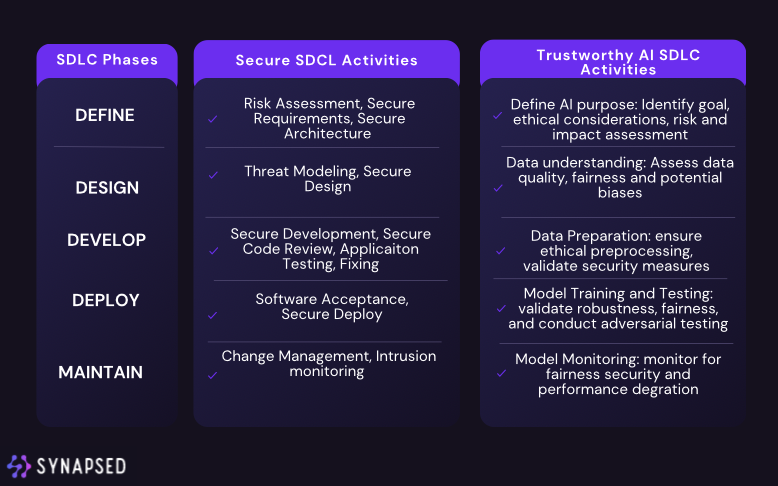

Phase 1: Define – Requirements & Ethical Planning

In the Define phase, stakeholders, including project managers, data scientists, and ethicists, collaboratively outline the AI system’s objectives, ethical constraints, and potential risks. Key activities include:

- Clearly documenting the AI’s purpose, scope, and ethical values.

- Conducting thorough risk assessments to identify potential ethical issues.

- Establishing explicit trustworthiness requirements (e.g., fairness metrics, explainability criteria).

- Ensuring stakeholder alignment on ethical goals, documented via an AI Ethics Charter or similar document.
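
An explicit trustworthiness requirement is easiest to track when it is written as a measurable check. The sketch below is one possible illustration, not part of the T-SDLC specification: it uses demographic parity difference (the gap in positive-prediction rates between groups) as the fairness metric, and the `MAX_PARITY_GAP` threshold of 0.10 is a hypothetical value that a team would agree on during the Define phase.

```python
from collections import defaultdict

# Hypothetical requirement agreed in the Define phase (an assumption, not a standard value).
MAX_PARITY_GAP = 0.10


def demographic_parity_difference(predictions, groups):
    """Return the largest gap in positive-prediction rate between any two groups."""
    if not predictions or len(predictions) != len(groups):
        raise ValueError("predictions and groups must be non-empty and the same length")
    totals = defaultdict(int)
    positives = defaultdict(int)
    for prediction, group in zip(predictions, groups):
        totals[group] += 1
        if prediction == 1:
            positives[group] += 1
    rates = [positives[group] / totals[group] for group in totals]
    return max(rates) - min(rates)


def meets_fairness_requirement(predictions, groups, max_gap=MAX_PARITY_GAP):
    """Check the documented requirement against a batch of binary predictions."""
    return demographic_parity_difference(predictions, groups) <= max_gap
```

Recording the metric and its threshold next to the AI Ethics Charter gives later phases a concrete target to test against. Demographic parity is only one of several fairness definitions, and the appropriate one depends on the use case.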

Phase 2: Design – Secure and Transparent Architecture

The Design phase addresses architecture, model choice, and data handling. Trustworthiness here involves:

- Designing data collection strategies that mitigate bias, ensure quality, and protect privacy.

- Incorporating security measures (e.g., input validation, zero trust architectures).

- Conducting threat modeling to anticipate and mitigate AI-specific risks (e.g., data poisoning, adversarial attacks).

- Selecting algorithms aligned with fairness, robustness, and transparency objectives.
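
As a small illustration of the input validation mentioned above, the following sketch checks an inference request against a declared feature schema before it reaches the model. The `FeatureSpec` class, the `validate_features` function, and the example bounds are all hypothetical names and values chosen for illustration; a real design would derive them from the data specification.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FeatureSpec:
    """Declared name and allowed numeric range for one model input (illustrative)."""
    name: str
    minimum: float
    maximum: float


def validate_features(features: dict, specs: list) -> list:
    """Return a list of validation errors; an empty list means the input is accepted."""
    errors = []
    expected = {spec.name for spec in specs}
    # Reject fields the model was never designed to receive.
    for unexpected in sorted(set(features) - expected):
        errors.append(f"unexpected feature: {unexpected}")
    for spec in specs:
        if spec.name not in features:
            errors.append(f"missing feature: {spec.name}")
            continue
        value = features[spec.name]
        # bool is a subclass of int in Python, so exclude it explicitly.
        if isinstance(value, bool) or not isinstance(value, (int, float)):
            errors.append(f"{spec.name}: not a number")
        # NaN fails every comparison, so it is rejected by the range check as well.
        elif not (spec.minimum <= value <= spec.maximum):
            errors.append(f"{spec.name}: out of range")
    return errors
```

Rejecting malformed or out-of-range inputs at the boundary does not stop adversarial examples by itself, but it narrows the space an attacker can probe and keeps the model within the range it was trained on.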

Phase 3: Development – Ethical Implementation & Rigorous Testing

During Development, implementation and thorough testing occur simultaneously to ensure ethical compliance:

- Adhering to secure coding practices, verifying the integrity of third-party libraries and dependencies.

- Implementing model training controls, such as adversarial training, to enhance robustness and reduce biases.

- Conducting comprehensive testing, including fairness audits, adversarial robustness testing, and interpretability validation.

- Iterating on the implementation based on testing outcomes to maintain ethical and functional standards.
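
One way to verify the integrity of a third-party dependency is to compare its SHA-256 digest with a value pinned in advance. The sketch below assumes the expected digest has been obtained from a trusted source; the function names `sha256_of_file` and `verify_artifact` are illustrative and not part of any particular tool.

```python
import hashlib
import hmac


def sha256_of_file(path, chunk_size=65536):
    """Compute the SHA-256 hex digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_artifact(path, expected_sha256):
    """Return True only if the file's digest matches the pinned value."""
    actual = sha256_of_file(path)
    # Hex digests are case-insensitive, so normalize the pinned value before comparing.
    return hmac.compare_digest(actual, expected_sha256.lower())
```

In practice, package managers can enforce this automatically. For example, pip's hash-checking mode (`pip install --require-hashes -r requirements.txt`) refuses to install any package whose digest is not pinned in the requirements file.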

Phase 4: Deployment – Transparent Release & Operational Controls

The Deployment phase emphasizes careful, transparent rollout and ongoing monitoring:

- Conducting final validation (user acceptance testing) in realistic conditions.

- Providing comprehensive documentation and transparency to users and stakeholders.

- Implementing controlled, phased rollouts with real-time monitoring for performance, bias, and security.

- Establishing strong operational security and incident response plans for quick mitigation of unforeseen issues.
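
A controlled, phased rollout needs a stable way to decide which users see the new model. The sketch below shows one common approach, assumed here for illustration: hash each user ID into one of 100 buckets and expose the model only to buckets below the current rollout percentage. The function names and the `"model-v2"` salt are hypothetical.

```python
import hashlib


def rollout_bucket(user_id: str, salt: str = "model-v2") -> int:
    """Map a user ID to a stable bucket in the range 0..99."""
    digest = hashlib.sha256(f"{salt}:{user_id}".encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % 100


def in_rollout(user_id: str, percentage: int, salt: str = "model-v2") -> bool:
    """Return True if this user falls inside the current rollout percentage."""
    return rollout_bucket(user_id, salt) < percentage
```

Because the bucket depends only on the salt and the user ID, raising the percentage from 10 to 50 keeps every user from the first cohort in the rollout. That makes monitoring results comparable between stages, and setting the percentage to 0 acts as a simple rollback switch.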

Phase 5: Maintenance – Continuous Monitoring & Ethical Governance

Finally, the Maintenance phase sustains trustworthiness through:

- Continuous performance and fairness monitoring to detect and correct drift.

- Regular model retraining and updates aligned with evolving data and requirements.

- Implementation of user feedback mechanisms and incident response procedures.

- Periodic ethical audits and transparent communication with users and stakeholders.
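
Drift detection can be sketched with the Population Stability Index (PSI), a widely used measure of how far a feature's live distribution has moved from its training baseline. The code below is a minimal illustration under stated assumptions: equal-width bins derived from the baseline, a small `epsilon` to avoid division by zero in empty bins, and a function name chosen for this example. The source does not prescribe a specific drift metric.

```python
import math


def population_stability_index(expected, actual, bins=10, epsilon=1e-6):
    """Compare two numeric samples; 0.0 means identical binned distributions."""
    if not expected or not actual:
        raise ValueError("both samples must be non-empty")
    low, high = min(expected), max(expected)
    # Fall back to a width of 1.0 when every baseline value is identical.
    width = (high - low) / bins or 1.0

    def proportions(values):
        counts = [0] * bins
        for value in values:
            index = int((value - low) / width)
            # Clamp values outside the baseline range into the first or last bin.
            index = min(max(index, 0), bins - 1)
            counts[index] += 1
        return [max(count / len(values), epsilon) for count in counts]

    baseline = proportions(expected)
    live = proportions(actual)
    return sum((a - e) * math.log(a / e) for e, a in zip(baseline, live))
```

A common rule of thumb treats a PSI below about 0.1 as stable and above about 0.25 as a significant shift, but these cutoffs are conventions rather than guarantees. Teams should calibrate alert thresholds on their own data and run the same check per demographic group to catch fairness drift.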

Adopting T-SDLC helps organizations build AI systems that are not just powerful and innovative, but consistently aligned with ethical principles and societal expectations throughout their lifecycle.

Future Directions in Trustworthy AI Development

The T-SDLC framework proposed by Synapsed Research is not static; it evolves with advancements in AI and emerging ethical considerations. Future developments include:

- Enhanced AI Governance: Implementing roles such as AI Ethics Officers to oversee ethical compliance.

- Automated Ethical Compliance Tools: Developing tools that automatically detect and mitigate ethical risks during development.

- Improved Explainability: Advancing techniques that make AI decision-making processes more transparent to users and stakeholders.

- Dynamic Risk Management: Establishing real-time risk assessment dashboards to proactively manage potential issues.

- Human-AI Collaboration: Designing systems that facilitate effective collaboration between humans and AI, ensuring that human oversight remains integral.

Conclusion

The Trustworthy SDLC proposed by Synapsed Research represents a significant advancement in AI product development, ensuring that ethical considerations are not an afterthought but a foundational element throughout the development process. By adopting this framework, organizations can build AI systems that are not only innovative and efficient but also aligned with societal values and ethical standards.

For a more in-depth exploration of the Trustworthy SDLC framework, email info@synapsed.ai to request the full white paper.

Related Work and References

- Royce, W. W. (1970). Managing the Development of Large Software Systems. Proceedings of IEEE WESCON, 26, 328-388.

https://www.praxisframework.org/files/royce1970.pdf

- McGraw, G. (2006). Software Security: Building Security In. Addison-Wesley Professional.

- ISO/IEC 5338:2023. (2023). Information technology — Artificial intelligence — AI system life cycle processes.

https://www.iso.org/standard/81118.html

- National Institute of Standards and Technology. (2023). Artificial Intelligence Risk Management Framework (AI RMF 1.0).

https://www.nist.gov/itl/ai-risk-management-framework

- Maninger, D., Narasimhan, K., & Mezini, M. (2023). Towards Trustworthy AI Software Development Assistance.

https://arxiv.org/pdf/2312.09126

- Baldassarre, M. T., Gigante, D., Kalinowski, M., & Ramos, R. (2024). POLARIS: A Framework to Guide the Development of Trustworthy AI Systems.

https://arxiv.org/pdf/2402.05340

- Ahuja, M. K., Belaid, M., Bernabé, P., Collet, M., Gotlieb, A., Indhumathi, C., Lazreg, S., Mancini, L., Marijan, D., & Sen, S. (2020). Opening the Software Engineering Toolbox for the Assessment of Trustworthy AI.

https://arxiv.org/abs/2007.07768

- European Commission. (2019). Ethics Guidelines for Trustworthy AI.

https://digital-strategy.ec.europa.eu/en/library/ethics-guidelines-trustworthy-ai

- ISO 31700-1:2023. (2023). Consumer protection — Privacy by design for consumer goods and services.

https://www.iso.org/standard/84977.html

- Deloitte. (2023). Trustworthy AI™ Framework.

https://www2.deloitte.com/us/en/pages/deloitte-analytics/solutions/ethics-of-ai-framework.html

- TechTarget. (2023). What is trustworthy AI and why is it important?

https://www.techtarget.com/searchenterpriseai/tip/What-is-trustworthy-AI-and-why-is-it-important

- National Institute of Standards and Technology. (2024). NIST Special Publication 800-218A: Secure Software Development Practices for Generative AI.

https://csrc.nist.gov/news/2024/nist-publishes-sp-800218a

- Floridi, L., Cowls, J., Beltrametti, M., Chatila, R., Chazerand, P., Dignum, V., Luetge, C., Madelin, R., Pagallo, U., Rossi, F., Schafer, B., Valcke, P., & Vayena, E. (2018). AI4People—An Ethical Framework for a Good AI Society: Opportunities, Risks, Principles, and Recommendations. Minds and Machines, 28(4), 689-707.

https://doi.org/10.1007/s11023-018-9482-5

- Arrieta, A. B., Díaz-Rodríguez, N., Del Ser, J., Bennetot, A., Tabik, S., Barbado, A., García, S., Gil-López, S., Molina, D., Benjamins, R., Chatila, R., & Herrera, F. (2020). Explainable Artificial Intelligence (XAI): Concepts, taxonomies, opportunities and challenges toward responsible AI. Information Fusion, 58, 82-115.

https://doi.org/10.1016/j.inffus.2019.12.012

- Amershi, S., Weld, D., Vorvoreanu, M., Fourney, A., Nushi, B., Collisson, P., Suh, J., Iqbal, S., Bennett, P. N., Inkpen, K., Teevan, J., Kikin-Gil, R., & Horvitz, E. (2019). Guidelines for Human-AI Interaction. Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems, 1-13.

https://doi.org/10.1145/3290605.3300233

- Mitchell, M., Wu, S., Zaldivar, A., Barnes, P., Vasserman, L., Hutchinson, B., Spitzer, E., Raji, I. D., & Gebru, T. (2019). Model Cards for Model Reporting. Proceedings of the Conference on Fairness, Accountability, and Transparency, 220-229.